Choose a topology

A YugabyteDB cluster consists of three or more nodes that communicate with each other and across which data is distributed. You can place the nodes of a YugabyteDB cluster across different zones in a single region, and across regions. The topology you choose depends on your requirements for latency, availability, and geo-distribution:

- Moving data closer to where end-users are enables lower latency access.

- Distributing data can make the data service resilient to zone and region outages in the cloud.

- Geo-partitioning can keep user data in a particular geographic region to comply with data sovereignty regulations.

YugabyteDB Aeon offers a number of deployment and replication options in geo-distributed environments to achieve resilience, performance, and compliance objectives.

| Type | Consistency | Read Latency | Write Latency | Best For |
|---|---|---|---|---|
| Multi zone | Strong | Low in region (1-10ms) | Low in region (1-10ms) | Zone-level resilience |
| Replicate across regions | Strong | High with strong consistency or low with eventual consistency | Depends on inter-region distances | Region-level resilience |
| Partition by region | Strong | Low in region (1-10ms); high across regions (40-100ms) | Low in region (1-10ms); high across regions (40-100ms) | Compliance, low latency I/O by moving data closer to customers |
| Read replica | Strong in primary, eventual in replica | Low in region (1-10ms) | Low in primary region (1-10ms) | Low latency reads |

For more information on replication and deployment strategies for YugabyteDB, see the following:

- DocDB replication layer

- Multi-region deployments

- Engineering Around the Physics of Latency

- 9 Techniques to Build Cloud-Native, Geo-Distributed SQL Apps with Low Latency

- Geo-partitioning of Data in YugabyteDB

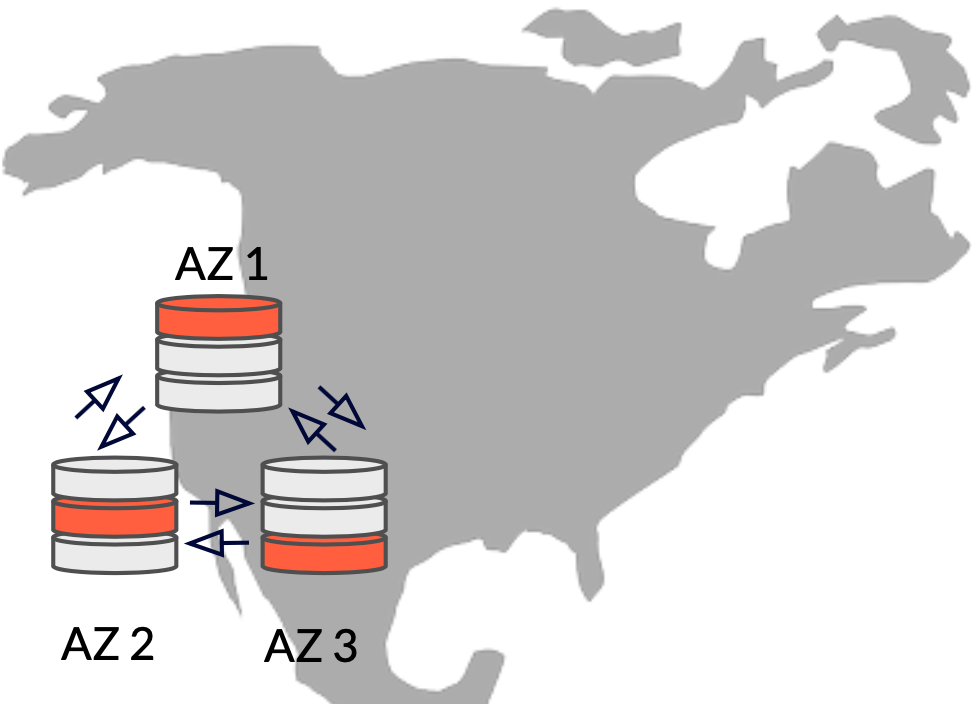

Single region multi-zone cluster

In a single-region multi-zone cluster, the nodes of the YugabyteDB cluster are placed across different availability zones in a single region.

Resilience: Cloud providers design zones to minimize the risk of correlated failures caused by physical infrastructure outages like power, cooling, or networking. In other words, single failure events usually affect only a single zone. By deploying nodes across zones in a region, you get resilience to a zone failure as well as high availability.

Consistency: YugabyteDB automatically shards the tables of the database, places the data across the nodes, and replicates all writes synchronously. The cluster ensures strong consistency of all I/O and distributed transactions.

Latency: Because the zones are close to each other, clients located in the same region as the cluster get low read and write latencies, typically 1-10ms for basic row access.

Strengths

- Strong consistency

- Resilience and high availability (HA) – zero recovery point objective (RPO) and near zero recovery time objective (RTO)

- Clients in the same region get low read and write latency

Tradeoffs

- Applications accessing data from remote regions may experience higher read/write latencies

- Not resilient to region-level outages, such as those caused by natural disasters like floods or ice storms

Fault tolerance

Availability Zone Level, with a minimum of 3 nodes across 3 availability zones in a single region.

Deployment

To deploy a multi-zone cluster, create a single-region cluster with Availability Zone Level fault tolerance. Refer to Create a single-region cluster.

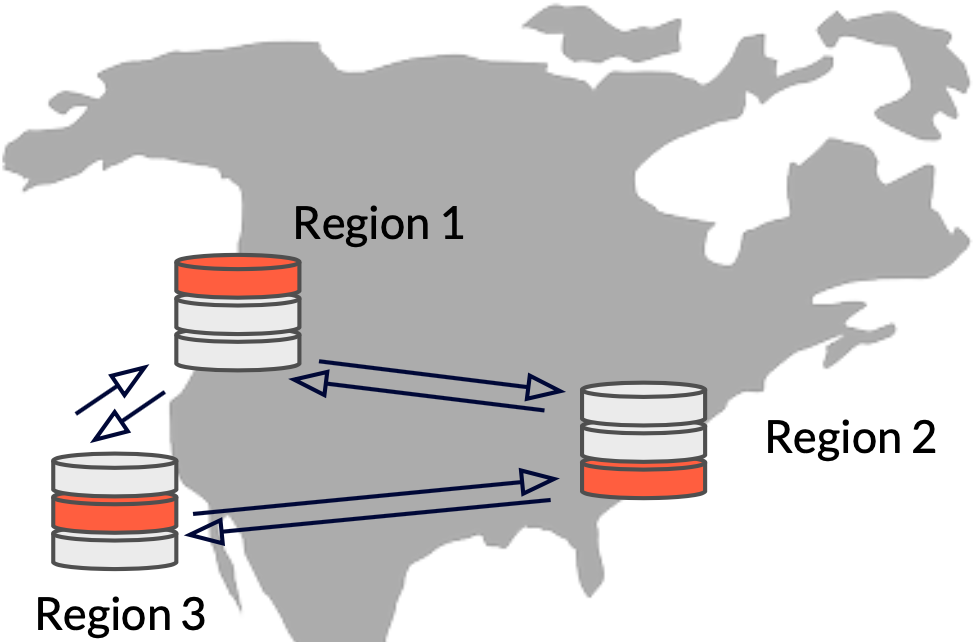

Replicate across regions

In a cluster that is replicated across regions, the nodes of the cluster are deployed in different regions rather than in different availability zones of the same region.

Resilience: Putting cluster nodes in different regions provides a higher degree of failure independence. In the event of a region failure, the database cluster continues to serve data requests from the remaining regions. YugabyteDB automatically performs a failover to the nodes in the other two regions, and the tablets being failed over are evenly distributed across the two remaining regions.

Consistency: All writes are synchronously replicated. Transactions are globally consistent.

Latency: Latency in a multi-region cluster depends on the distance and network packet transfer times between the nodes of the cluster and between the cluster and the client. Write latencies in this deployment mode can be high because the tablet leader replicates each write to a majority of tablet peers before responding to the client, so every write involves cross-region communication between tablet peers.

As a mitigation, you can enable follower reads and set a preferred region.
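The majority rule described under Latency can be illustrated with a small model. The following is a minimal sketch, not YugabyteDB code: it assumes the leader counts as one vote and that commit latency is dominated by the slowest acknowledgement among the fastest followers needed for a majority. The function names and round-trip times are hypothetical, and the model ignores the hop between the client and the tablet leader.

```python
from __future__ import annotations


def majority(replication_factor: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replication_factor // 2 + 1


def estimated_write_latency_ms(follower_rtts_ms: list[float]) -> float:
    """Estimate commit latency as seen by the tablet leader.

    The leader is one vote, so it waits for (majority - 1) follower
    acknowledgements. The slowest of those fastest followers sets the latency.
    """
    replication_factor = len(follower_rtts_ms) + 1
    acks_needed = majority(replication_factor) - 1
    if acks_needed == 0:
        return 0.0
    return sorted(follower_rtts_ms)[acks_needed - 1]


# Hypothetical round-trip times (ms) from a leader to followers in two other regions.
rtts = [70.0, 12.0]
print(estimated_write_latency_ms(rtts))  # prints 12.0
```

In this model, a three-region cluster pays only the round trip to the nearer of the two remote regions on each write, which is why the placement of tablet leaders relative to the other regions matters.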

Strengths

- Resilience and HA – zero RPO and near zero RTO

- Strong consistency of writes, tunable consistency of reads

Tradeoffs

- Write latency can be high (depends on the distance/network packet transfer times)

- Follower reads trade off consistency for latency

Fault tolerance

Region Level, with a minimum of 3 nodes across 3 regions.

Deployment

To deploy a multi-region replicated cluster, refer to Replicate across regions.


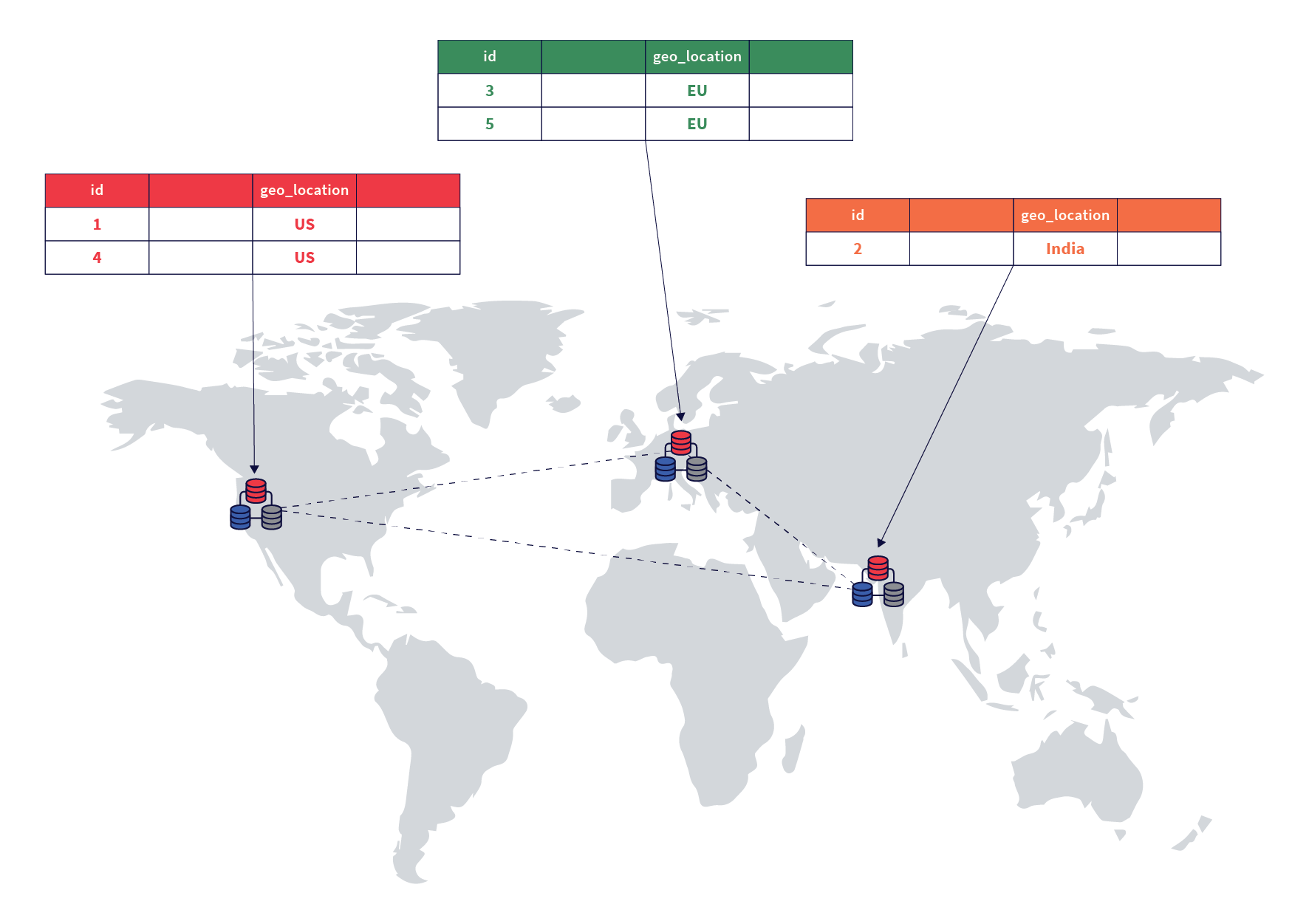

Partition by region

Applications that need to keep user data in a particular geographic region to comply with data sovereignty regulations can use row-level geo-partitioning in YugabyteDB. This feature allows fine-grained control over pinning rows in a user table to specific geographic locations.

Here's how it works:

- Pick a column of the table to use as the partition column. In a user table, for example, the value of this column could be the country or geographic name.

- Next, create partition tables based on the partition column of the original table. You end up with one partition table for each region that you want to pin data to.

- Finally, pin each partition table so that its data lives in different zones of the target region.

With this deployment mode, the cluster automatically keeps specific rows and all the table shards (known as tablets) in the specified region. In addition to complying with data sovereignty requirements, you also get low-latency access to data from users in the region while maintaining transactional consistency semantics.
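The three steps above can be sketched as YSQL statements. The following Python snippet only builds the DDL strings, so it runs without a database. It is a sketch under assumptions: the table, column, tablespace, and zone names are hypothetical, and the statement shapes follow my understanding of the YSQL `CREATE TABLESPACE ... replica_placement` and PostgreSQL list-partitioning syntax, which you should verify against the YSQL reference. In YugabyteDB Aeon, tablespaces may already exist for each region, in which case only the partition statements would apply.

```python
from __future__ import annotations

import json


def tablespace_ddl(name: str, cloud: str, region: str, zones: list[str]) -> str:
    """Build a CREATE TABLESPACE statement placing one replica in each zone."""
    placement = {
        "num_replicas": len(zones),
        "placement_blocks": [
            {"cloud": cloud, "region": region, "zone": zone, "min_num_replicas": 1}
            for zone in zones
        ],
    }
    return (
        f"CREATE TABLESPACE {name} "
        f"WITH (replica_placement='{json.dumps(placement)}');"
    )


def partition_ddl(parent: str, name: str, values: list[str], tablespace: str) -> str:
    """Build a partition table pinned to a tablespace (values are trusted constants)."""
    quoted = ", ".join(f"'{v}'" for v in values)
    return (
        f"CREATE TABLE {name} PARTITION OF {parent} "
        f"FOR VALUES IN ({quoted}) TABLESPACE {tablespace};"
    )


# Step 1: a parent table partitioned on a hypothetical "geo" column.
parent = (
    "CREATE TABLE users (id bigint, geo text, email text, "
    "PRIMARY KEY (id, geo)) PARTITION BY LIST (geo);"
)

# Steps 2 and 3: one partition per region, pinned to zones in that region.
statements = [
    parent,
    tablespace_ddl("eu_ts", "aws", "eu-west-1",
                   ["eu-west-1a", "eu-west-1b", "eu-west-1c"]),
    partition_ddl("users", "users_eu", ["DE", "FR"], "eu_ts"),
]
for statement in statements:
    print(statement)
```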

In YugabyteDB Aeon, a partition-by-region cluster initially consists of a primary region, which holds all tables that aren't geo-partitioned (that is, tables that don't reside in a tablespace), and any number of additional regions where you can store partitioned data, whether to reduce latency or to comply with data sovereignty requirements. Tablespaces are automatically placed in all the regions.

Resilience: Clusters with geo-partitioned tables are resilient to zone-level failures when the nodes in each region are deployed in different zones of the region.

Consistency: Because this deployment model has a single cluster that is spread out across multiple geographies, all writes are synchronously replicated to nodes in different zones of the same region, thus maintaining strong consistency.

Latency: Because all the tablet replicas are pinned to zones in a single region, read and write overhead is minimal and latency is low. To insert rows or make updates to rows pinned to a particular region, the cluster needs to touch only tablet replicas in the same region.

Strengths

- Table data can be pinned to specific geographic regions to meet data sovereignty requirements

- Low latency reads and writes in the region where the data resides

- Strongly consistent reads and writes

Tradeoffs

- Row-level geo-partitioning is useful only for use cases where the dataset and access to the data are logically partitioned. Examples include users in different countries accessing their accounts, and localized products (or product inventory) in a product catalog.

- When users travel, access to their data will incur cross-region latency because their data is pinned to a different region.

Fault tolerance

The regions in the cluster can have fault tolerance of None (single node, no HA), Node Level (minimum 3 nodes in a single availability zone), or Availability Zone Level (minimum 3 nodes in 3 availability zones; recommended). Any regions you add to the cluster have the same fault tolerance as the primary region.

Deployment

To deploy a partition-by-region cluster, refer to Partition by region.


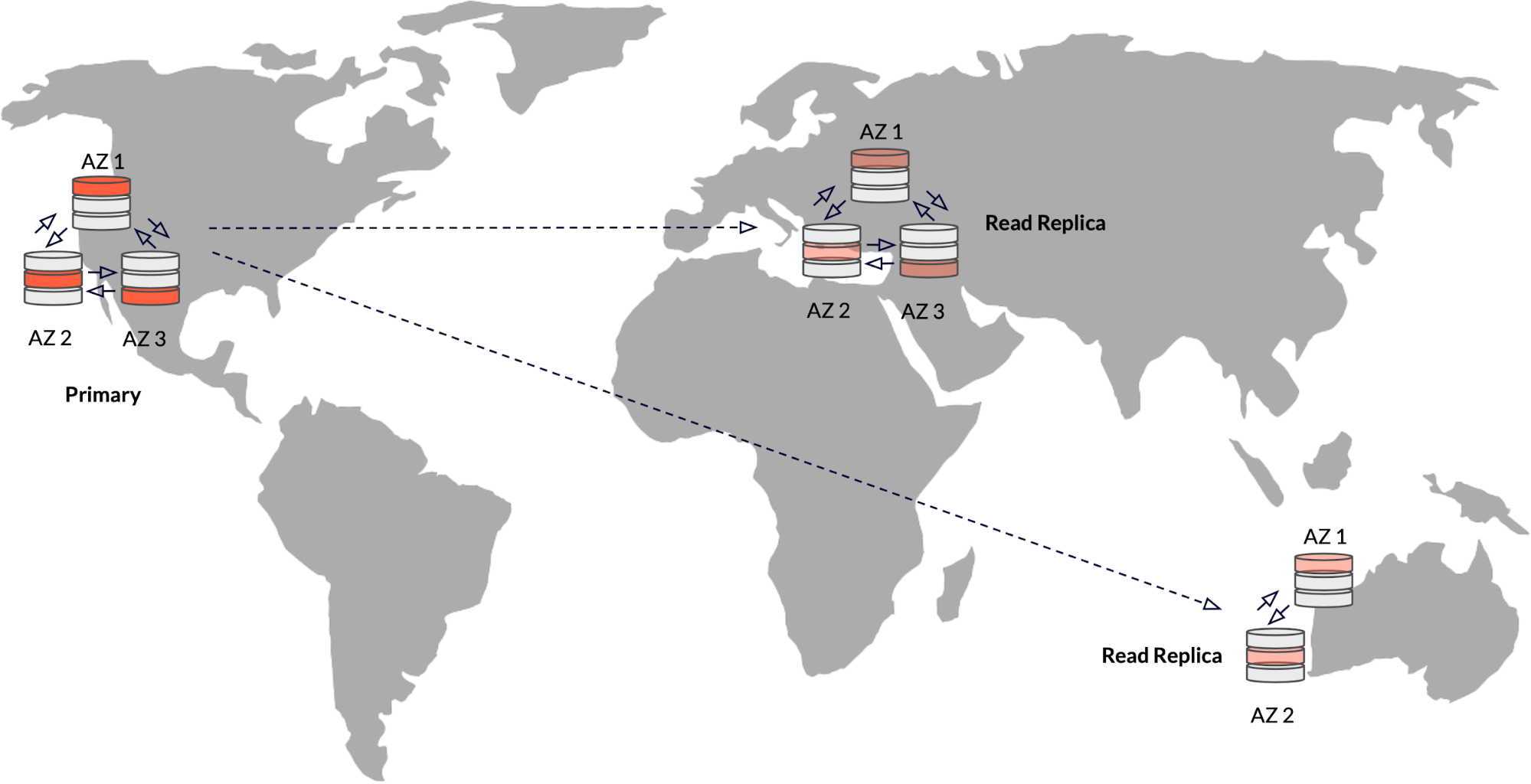

Read replicas

For applications that write from a single zone or region but need to serve read requests from multiple remote regions, you can use read replicas. Data from the primary cluster is automatically replicated asynchronously to one or more read replica clusters. The primary cluster receives all write requests, while read requests can go to either the primary cluster or a read replica cluster, depending on which is closest. To read data from a read replica, you enable follower reads for the cluster.
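As an illustration of enabling follower reads from an application, the following sketch configures a session through any DB-API style cursor. It assumes the YSQL session parameters `yb_read_from_followers` and `yb_follower_read_staleness_ms`, and that follower reads apply only to read-only transactions; confirm the parameter names and defaults against the YSQL documentation for your version. The helper name and the recording cursor are hypothetical and exist only so the example runs without a database.

```python
def enable_follower_reads(cursor, staleness_ms: int = 30000) -> None:
    """Configure the current session so reads may be served by followers or read replicas."""
    # Assumption: follower reads take effect only in read-only transactions.
    cursor.execute("SET SESSION CHARACTERISTICS AS TRANSACTION READ ONLY")
    cursor.execute("SET yb_read_from_followers = true")
    # Upper bound on how stale the returned data may be, in milliseconds.
    cursor.execute(f"SET yb_follower_read_staleness_ms = {int(staleness_ms)}")


class RecordingCursor:
    """Stand-in for a DB-API cursor that records statements instead of running them."""

    def __init__(self):
        self.statements = []

    def execute(self, sql):
        self.statements.append(sql)


cursor = RecordingCursor()
enable_follower_reads(cursor, staleness_ms=15000)
for statement in cursor.statements:
    print(statement)
```

With a real driver, you would pass a cursor from a connection opened against the node closest to the client, so that reads are served from the nearby replica.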

Resilience: If you deploy the nodes of the primary cluster across zones or regions, you get zone- or region-level resilience. Read replicas don't participate in the Raft consistency protocol and therefore don't affect resilience.

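Consistency: The data in the replica clusters is timeline consistent: replicas apply updates in the same order as the primary cluster, so a read may return slightly stale data but never sees updates out of order. This is a stronger guarantee than eventual consistency.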

Latency: Reads from both the primary cluster and read replicas can be fast (single digit millisecond latency) because read replicas can serve timeline consistent reads without having to go to the tablet leader in the primary cluster. Read replicas don't handle write requests; these are redirected to the primary cluster. So the write latency will depend on the distance between the client and the primary cluster.

Strengths

- Fast, timeline-consistent reads from replicas

- Strongly consistent reads and writes to the primary cluster

- Low latency writes in the primary region

Tradeoffs

- The primary cluster and the read replicas are correlated clusters, not two independent clusters. In other words, adding read replicas doesn't improve resilience.

- Read replicas can't take writes, so write latency from remote regions can be high even if there is a read replica near the client.

Fault tolerance

Read replicas have a minimum of 1 node. Adding nodes to the read replica increases the replication factor (that is, adds copies of the data) to protect the read replica from node failure.

Deployment

You can add replicas to an existing primary cluster as needed. Refer to Read replicas.
